Introduction

I’m a developer. During development, applications perform less than optimally:

- algorithms need tweaking for real-world usage

- debuggers eat performance

- measuring speed eats performance

Anything that happens in memory, without paging, is relatively “cheap”. Every operation that needs, or indirectly causes, disk access is expensive. In the storage market, (larger) SSDs are becoming more and more affordable. Measured by cost per unit of performance (not purchase price), they’re already cheaper than conventional hard drives.

Recently, I tested what this means for me, with two workloads in mind that require almost constant disk access:

- virtual machine storage

- database storage

This is the test setup:

- A basic HP Elite 7300 with an Intel Core i3-2120 (3.30 GHz), SATA 3 Gb/s

- 12 GB DDR3 @ 1333 MHz

- Windows 10 Technical Preview on a Hitachi 250 GB drive

- 2x Samsung 850 EVO, 250 GB each

- Virtual machine: IE 11 on Windows 8.1 (from https://www.modern.ie/)

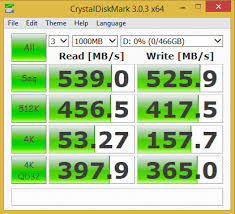

The 250 GB Samsung 850 EVO has a theoretical read throughput of about 539 MB/s in CrystalDiskMark.
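To make a read-throughput figure like this concrete, here is a minimal Python sketch that reads a file front to back and reports MB/s. It is not how CrystalDiskMark works internally; the function names `sequential_read_mb_per_s` and `percent_of_native` are hypothetical, and the sketch assumes you already have a large test file on the drive. Unlike a dedicated benchmark, it does not bypass the operating system's file cache.

```python
import time


def sequential_read_mb_per_s(path: str, block_size: int = 1024 * 1024) -> float:
    """Read `path` front to back in fixed-size blocks; return throughput in MB/s.

    MB here means 10**6 bytes. The read goes through the OS file cache, so a
    recently read file may be served from RAM and report far more than the
    disk itself can deliver.
    """
    total_bytes = 0
    start = time.perf_counter()
    # buffering=0 returns an unbuffered raw file object (no Python-level buffer);
    # the operating system's own cache still applies.
    with open(path, "rb", buffering=0) as f:
        while True:
            chunk = f.read(block_size)
            if not chunk:  # b"" signals end of file
                break
            total_bytes += len(chunk)
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero interval
    return total_bytes / 1_000_000 / elapsed


def percent_of_native(vm_mb_s: float, native_mb_s: float) -> float:
    """Express a throughput measured inside a VM as a percentage of the host figure."""
    return 100.0 * vm_mb_s / native_mb_s
```

Using a test file larger than RAM (more than 12 GB on this machine) keeps the cache from hiding the disk. Running the same measurement on the host and inside the virtual machine and passing both results to `percent_of_native` gives a relative figure; for example, `percent_of_native(400, 500)` returns `80.0`.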

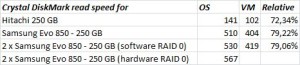

I compared pure OS throughput with CrystalDiskMark results inside the virtual machine. For a single Samsung 850, 510 MB/s is acceptable. I then tried a RAID 0 setup. I know: a striped array is lost if one of its drives fails. But with regular backups, that is an acceptable risk (to me). These are the complete test results:

Conclusion

- The performance gain compared to conventional hard disks is astronomical.

- The performance gain through RAID 0 is marginal.

- In a performance/reliability trade-off, I’d choose to run separate disks (for instance, one for the OS and one for VM storage) over RAID 0.

- Virtual machine disk performance is surprisingly acceptable at 70 to 80 percent of native throughput.
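The reliability side of the RAID 0 trade-off can be put into numbers with a little arithmetic. This is a simplified sketch, assuming every drive fails independently with the same probability `p` over some period; the function name `compare` and the value `p = 0.02` are hypothetical, not measured. A stripe loses everything if any single drive fails, while separate disks lose everything only if all of them fail, and otherwise lose just the failed drive's share.

```python
def compare(p: float, disks: int = 2) -> dict[str, float]:
    """Compare data-loss risk for RAID 0 versus separate, non-striped disks.

    Assumes independent drive failures, each with probability p.
    """
    return {
        # The stripe survives only if every drive survives.
        "raid0_all_lost": 1.0 - (1.0 - p) ** disks,
        # Separate disks lose everything only if every drive fails.
        "separate_all_lost": p ** disks,
        # Each separate disk loses only its own share, with probability p.
        "separate_expected_fraction_lost": p,
    }


if __name__ == "__main__":
    # p = 0.02 is a made-up per-drive failure probability for illustration.
    for name, value in compare(0.02).items():
        print(f"{name}: {value:.4f}")
```

With two drives and `p = 0.02`, the stripe loses all data with probability of about 0.0396, roughly twice the single-drive risk, while two separate disks lose everything only with probability 0.0004.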
